Dynamicweb 9 Security

Dynamicweb is a web application serving web pages from a web server. There are two areas of security to consider when hosting a Dynamicweb solution:

- Infrastructure tier security (firewall, Windows, IIS, SQL Server, ASP.NET and related technologies)

- Application tier security (the Dynamicweb application)

This document primarily covers the application tier security. For infrastructure tier security, please refer to the hosting provider.

The Dynamicweb application uses several approaches to protect the software against compromise. These make it much more difficult to post unintended content, access data, gain access to restricted areas of the website, or conduct denial-of-service (DoS) attacks against a solution.

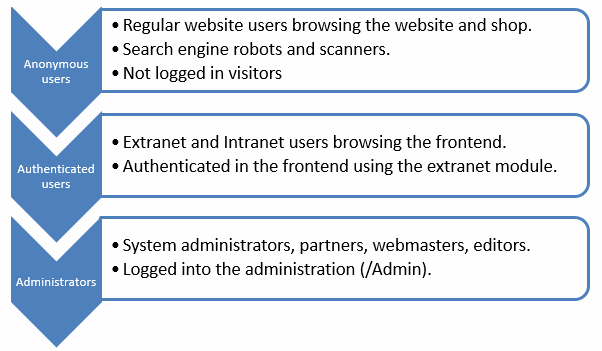

Dynamicweb has three levels of user access control (UAC): anonymous users, authenticated users and administrators (Figure 1.1).

Anonymous and authenticated users can only browse the frontend of the website (/Default.aspx). They have access to everything outside the /Admin and /CustomModules folders and their subfolders, with the exception of the /Admin/Public folder, which is also accessible to them.

Administrators have access to the frontend and the backend (/Admin).
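The path rules above can be expressed as a small decision function. This is a minimal sketch for illustration only, not Dynamicweb's implementation: the `Role` enum and `can_access` function are hypothetical names, and the sketch assumes case-insensitive, segment-by-segment path matching (so /Administration is not mistaken for /Admin).

```python
from enum import Enum
from pathlib import PurePosixPath


class Role(Enum):
    """Hypothetical names for the three access levels described above."""
    ANONYMOUS = "anonymous"
    AUTHENTICATED = "authenticated"
    ADMINISTRATOR = "administrator"


# Top-level folders closed to frontend users, and the one public exception.
RESTRICTED_ROOTS = ("admin", "custommodules")
PUBLIC_EXCEPTION = ("admin", "public")


def can_access(role: Role, url_path: str) -> bool:
    """Return True if `role` may request `url_path` under the rules above.

    Matching is case-insensitive (an assumption) and compares whole path
    segments, so '/Administration' is not treated as '/Admin'.
    """
    if role is Role.ADMINISTRATOR:
        return True  # administrators reach both frontend and backend
    parts = tuple(p.lower() for p in PurePosixPath(url_path).parts if p != "/")
    if parts[:2] == PUBLIC_EXCEPTION:
        return True  # /Admin/Public is open to everyone
    return not (parts and parts[0] in RESTRICTED_ROOTS)
```

For example, `can_access(Role.ANONYMOUS, "/Admin/Public/Login.aspx")` is allowed, while `can_access(Role.AUTHENTICATED, "/CustomModules/Shop/Edit.aspx")` is refused.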

Dynamicweb's application-level security protects against the following types of attack:

- Cross-site scripting (XSS) attacks

- HTML injection attacks

- SQL injection attacks

- HTTP header injection

- Cookie injection

- POST data attacks

- Attacks that exploit missing data input validation

Dynamicweb uses the following checks to handle these attacks:

- Check 1: Verify that the current user has access to the requested area of the software, either the administration (/Admin) or frontend pages with permissions. This check runs before the request or any incoming data is processed. It is global and covers all requests handled by the .NET engine; it does not apply to static files that do not pass through the engine, e.g. /Files/Images/Picture.jpg.

  - If the user does not have access, a login dialog is shown.

- Check 2: Scan all incoming data (POST, GET, cookies and headers) for SQL injection. A number of tests are run against all data sent to the application layer. Like check 1, this runs before any processing of the request or its data, and it is global across all requests handled by the .NET engine.

  - If one of the tests fails, Dynamicweb bans the client IP for 15 minutes, returning HTTP status 404 for all subsequent requests from it during that period.

- Check 3: Scan all incoming data (POST, GET, cookies and headers) for XSS and HTML injection.

  - If one of the tests fails, Dynamicweb returns HTTP status 404 for the request and stops all further processing of it.

- Check 4: Validate incoming data and convert it to the proper data type to keep bad data out of the application.

  - All data access goes through parameterized queries to prevent injection.
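The global behaviour of checks 2 and 3 (scan every incoming value, ban the IP for 15 minutes on a SQL injection hit, reject only the current request on an XSS hit) can be sketched as follows. This is a hypothetical illustration, not Dynamicweb's code: `RequestGuard` is an invented name, and the regular expressions are deliberately naive stand-ins for the real tests, which are not documented here.

```python
import re
import time

BAN_SECONDS = 15 * 60  # check 2: ban the IP for 15 minutes

# Illustrative patterns only; these are NOT Dynamicweb's actual tests.
SQLI_PATTERNS = [
    re.compile(r"('|\")\s*(or|and)\s+\d+\s*=\s*\d+", re.I),
    re.compile(r";\s*(drop|delete|insert|update)\s", re.I),
    re.compile(r"\bunion\b\s+(all\s+)?select\b", re.I),
]
XSS_PATTERNS = [
    re.compile(r"<\s*script\b", re.I),
    re.compile(r"\bon\w+\s*=", re.I),  # event handlers such as onerror=
    re.compile(r"javascript\s*:", re.I),
]


class RequestGuard:
    """Hypothetical sketch of the global scans in checks 2 and 3."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._banned_until = {}  # ip -> time at which the ban expires

    def handle(self, ip: str, values: list) -> int:
        """Return an HTTP status: 404 to reject, 200 to continue processing.

        `values` stands for all incoming POST, GET, cookie and header values.
        """
        now = self._clock()
        if self._banned_until.get(ip, 0.0) > now:
            return 404  # still inside the 15-minute ban window
        if any(p.search(v) for v in values for p in SQLI_PATTERNS):
            self._banned_until[ip] = now + BAN_SECONDS
            return 404  # check 2: ban the IP and reject
        if any(p.search(v) for v in values for p in XSS_PATTERNS):
            return 404  # check 3: reject this request only, no ban
        return 200
```

The injectable `clock` makes the ban window testable without waiting 15 minutes.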

Checks 1 to 3 are applied to all data entering the system regardless of how the solution is developed. They cover both standard and custom modules and cannot be bypassed by modules, although the system administrator can disable checks 2 and 3. Check 4 is performed by each functionality or module in the system and must be implemented manually in custom modules.
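For check 4 in a custom module, the two halves, type conversion and parameterized queries, might look like this minimal sketch. It uses Python's built-in `sqlite3` module and a hypothetical `pages` table purely to stay self-contained; against SQL Server from .NET the same principle applies, with named parameters such as `@id` on a `SqlCommand` instead of `?` placeholders.

```python
import sqlite3


def parse_page_id(raw: str) -> int:
    """Validation: convert input to the expected type, rejecting anything else."""
    page_id = int(raw)  # raises ValueError for input such as "1 OR 1=1"
    if page_id <= 0:
        raise ValueError("page id must be positive")
    return page_id


def fetch_page_title(conn: sqlite3.Connection, raw_id: str):
    """Data access: pass the value as a bound parameter, never via string formatting."""
    page_id = parse_page_id(raw_id)
    row = conn.execute(
        "SELECT title FROM pages WHERE id = ?", (page_id,)
    ).fetchone()
    return row[0] if row else None
```

Either step alone would block the example payload; doing both means a mistake in one does not leave the module open.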

DoS & DDoS prevention

DoS attacks can target two layers: the application level and the network level. At the application level, Dynamicweb has a built-in DoS protection policy and framework that detects malicious traffic and bans the offending IP from executing further page views that would impact performance. This mechanism works against traffic from a single attacker; it offers little protection when the attack is distributed (DDoS).

At the network level, Dynamicweb contains no DoS prevention mechanisms; this has to be handled by the infrastructure tier security of the service provider.
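An application-level limiter of the kind described can be sketched as a per-IP sliding window. The class name and default thresholds below (100 page views per 10 seconds, 15-minute ban) are assumptions for illustration, not Dynamicweb's actual policy. The sketch also shows why the approach is weak against distributed attacks: each attacking IP only needs to stay under `max_views` to avoid a ban.

```python
import time
from collections import defaultdict, deque


class PageViewLimiter:
    """Hypothetical per-IP limiter: too many page views in a window -> temporary ban.

    The thresholds are illustrative assumptions, not Dynamicweb's settings.
    """

    def __init__(self, max_views=100, window_s=10.0, ban_s=900.0, clock=time.monotonic):
        self.max_views, self.window_s, self.ban_s = max_views, window_s, ban_s
        self._clock = clock
        self._views = defaultdict(deque)  # ip -> timestamps inside the window
        self._banned_until = {}           # ip -> time the ban expires

    def allow(self, ip: str) -> bool:
        """Record a page view from `ip` and return whether it may proceed."""
        now = self._clock()
        if self._banned_until.get(ip, float("-inf")) > now:
            return False  # IP is currently banned
        views = self._views[ip]
        while views and now - views[0] >= self.window_s:
            views.popleft()  # drop views older than the window
        views.append(now)
        if len(views) > self.max_views:
            self._banned_until[ip] = now + self.ban_s
            views.clear()
            return False  # threshold exceeded: start the ban
        return True
```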

DDoS mitigation is a set of techniques or tools for resisting or mitigating the impact of distributed denial-of-service (DDoS) attacks on networks attached to the Internet by protecting the target and relay networks. DDoS attacks are a constant threat to businesses and organizations, because they can degrade service performance or shut down a website entirely, even if only for a short time.

The first step in DDoS mitigation is to identify normal conditions for network traffic by defining "traffic patterns", which is necessary for threat detection and alerting. DDoS mitigation also requires classifying incoming traffic to separate human traffic from human-like bots and hijacked web browsers. This is done by comparing signatures and examining different attributes of the traffic, including IP addresses, cookie variations, HTTP headers and JavaScript footprints.
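The first step, defining a baseline traffic pattern, can be illustrated with a simple statistical threshold on requests per minute. This is an assumed, deliberately simple method (mean plus k standard deviations, with hypothetical function names) rather than what any particular mitigation product does, and it does not cover the separate task of telling human traffic from bots.

```python
import statistics


def build_baseline(normal_counts: list, k: float = 3.0) -> float:
    """Alert threshold from requests-per-minute observed under normal conditions.

    Mean + k standard deviations is one simple, assumed choice of threshold.
    """
    mean = statistics.fmean(normal_counts)
    stdev = statistics.pstdev(normal_counts)
    return mean + k * stdev


def anomalous_minutes(counts: list, threshold: float) -> list:
    """Indexes of minutes whose request count exceeds the baseline threshold."""
    return [i for i, c in enumerate(counts) if c > threshold]
```

With a normal period of `[100, 110, 90, 105, 95]` the threshold is about 121 requests per minute, so in `[98, 120, 500, 130]` only minutes 2 and 3 would raise an alert.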

One technique is to pass network traffic addressed to a potential target network through high-capacity networks with "traffic scrubbing" filters.

Manual DDoS mitigation is no longer recommended, because attackers can circumvent mitigation software that has to be activated manually. Best practices for DDoS mitigation include having both anti-DDoS technology and anti-DDoS emergency response services. DDoS mitigation is also available through cloud-based providers.

Data Security

The data in a Dynamicweb solution is stored in the database, and Dynamicweb uses a single database account with CRUD and DDL rights to access and maintain both the data and the data model.

End-user permissions for the data are handled by the application tier, not by the database. Access to the server and the database should therefore be configured so that unwanted direct access to them is restricted.

Dynamicweb can be configured to encrypt user passwords in the user database. Using the SHA512 hashing algorithm is strongly recommended.
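As a sketch of salted, SHA-512-based password storage, the example below uses PBKDF2-HMAC with SHA-512 from Python's standard library, which applies SHA-512 repeatedly with a per-user salt. Dynamicweb's own storage format is not described here, so the function names, salt length and iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 210_000  # illustrative work factor; tune for your hardware


def hash_password(password: str) -> tuple:
    """Return (salt, digest) using PBKDF2-HMAC with SHA-512."""
    salt = os.urandom(16)  # unique random salt per user
    digest = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)
```

Only the salt and digest are stored; the password itself can never be read back, which is what distinguishes hashing from reversible encryption.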

Some Dynamicweb apps and modules can be configured to save data to disk, for example in CSV files. These files can contain sensitive information if not used correctly.

- Make sure that no forms save sensitive data to files. Newer versions of the forms modules save data to the database, which is a much more secure approach.

- Review every folder in the solution's file archive and consider whether it can contain information that should not be publicly available. If so, IIS security must be configured to block public access to it.

Folders that should not be publicly accessible by default:

- /Files/System/
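Reviewing the file archive can be partly automated. The sketch below walks a local copy of the /Files folder and lists files whose extensions suggest exported data; the `find_data_files` function and the extension list are hypothetical and should be adapted to the solution. Each hit then needs a manual decision: remove the file or block the folder in IIS.

```python
from pathlib import Path

# Hypothetical list of extensions that often hold exported data.
DATA_EXTENSIONS = {".csv", ".xls", ".xlsx", ".xml", ".txt"}


def find_data_files(files_root: str) -> list:
    """List files under the file archive that may contain exported data.

    Paths are returned relative to `files_root`, with forward slashes, sorted.
    """
    root = Path(files_root)
    hits = [
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in DATA_EXTENSIONS
    ]
    return sorted(hits)
```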

Security scanning

The security mechanisms above are tested regularly (with every minor release) using sqlmap (http://sqlmap.org/) to check for vulnerabilities.

sqlmap is a penetration testing tool that automates the process of detecting and exploiting SQL injection flaws and taking over database servers. It comes with a powerful detection engine, many niche features for full penetration testing, and a broad range of switches, from database fingerprinting and fetching data from the database to accessing the underlying file system and executing commands on the operating system via out-of-band connections.

All of this is tested on a regular basis.
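A scan like this can be scripted so that it runs with every release. The sketch assumes sqlmap is available as a `sqlmap` executable on the PATH, uses hypothetical helper names, and treats the target URL as a placeholder for a test copy of the solution. It relies only on sqlmap's `-u` (target URL), `--batch` (never prompt, use defaults), `--level` (1 to 5) and `--risk` (1 to 3) options.

```python
import shlex
import shutil
import subprocess


def sqlmap_command(url: str, level: int = 1, risk: int = 1) -> list:
    """Build a non-interactive sqlmap command line for one target URL."""
    if not 1 <= level <= 5 or not 1 <= risk <= 3:
        raise ValueError("sqlmap accepts --level 1-5 and --risk 1-3")
    return ["sqlmap", "-u", url, "--batch", f"--level={level}", f"--risk={risk}"]


def run_scan(url: str, level: int = 1, risk: int = 1) -> int:
    """Run the scan if sqlmap is installed and return the process exit code."""
    if shutil.which("sqlmap") is None:
        raise FileNotFoundError("sqlmap is not on PATH")
    cmd = sqlmap_command(url, level, risk)
    print("Running:", shlex.join(cmd))
    return subprocess.run(cmd, check=False).returncode
```

Higher `--level` and `--risk` values test more parameters and payloads at the cost of longer, noisier scans.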